Engineers invent, design, analyze, build, test, and maintain complex physical systems, structures, and materials to solve some of society’s most urgent problems, but also to improve the quality of life of individuals. Engineering is artifact-centered and concerned with realizing physical products of all shapes, sizes, and functions. At intengineering I am motivated by the quest to empower these engineers to cope with the ever-increasing complexity of the systems they have to provide. I provide science- and innovation-based reports, thoughts, ideas, and interesting news: informal but current, thought-provoking, and open.

Contribution and Innovation in Scientific Research

In our journal Advanced Engineering Informatics, we ask authors to summarize their contribution when they submit their work. Implementing this additional step during submission was necessary for us to deal with the rapidly growing number of submissions we are receiving. We use the summary extensively in our editorial screening process to decide whether submitted papers are within scope or not.

Analyzing the replies we received in recent years, I realized that it is quite difficult for submitting authors to distinguish between a claim to a general innovation and a scientific contribution. We realized that most of the authors simply summarize their innovations in their replies.

I believe that a contribution is more than a simple innovation. I think the word contribution points more towards adding to an existing body of knowledge. Innovation points more towards the new without looking at what exists. I think an innovation also points more towards a tangible product, in the form of a method or artifact, while ‘contribution’ seems to point more towards some intangible novelty, in the form of new knowledge for a specific community.

We use the word contribution in scientific contexts, as we believe in the step-by-step advancement of knowledge within a scientific community. We believe in scientific advancement and in standing on the ‘shoulders of giants’. Claiming a contribution requires carefully sketching the community’s previous work as a line of subsequent progression. The stress here is on a continuous line, not on a loose assemblage of prior works that do not really build on each other. Of course, an argument for a contribution also needs to include the ‘newly presented’, but it needs to explain what prior work the ‘newly presented’ is building upon. The argument then needs to stress an advancement of this previous work.

But why is it important? Isn’t the new more important than the old, and shouldn’t the focus be on the new? I truly believe that both deserve attention, because of an important part of scientific work: community building. The scientific world has exploded in the last decades. Even a few decades ago, when I started working in science, communities were small groups of people who worked on specific topics. Everybody knew each other personally and met regularly at specific small-scale conferences. By now the number of people working in any one field that I am involved in has increased exponentially, and so, of course, has the amount of work that is published. While just two or three decades ago it was easily possible for informed readers to understand the community of an author and grasp the streams in the literature that influenced the author’s work, this is no longer easily possible. Hence, to maintain scientific communities, explicit arguments about how work builds upon previous streams become more and more important.

For the editor of a journal, community building is perhaps the most important aspect for ensuring success. Every journal relies upon the scientists who strongly identify with the journal’s scope, its aims, and its goals. These scientists come back to the journal as readers and authors because they expect consistency within the evolving streams of debate in the journal. They also expect that new authors submitting work to the journal understand the streams that the journal has developed previously. Each new submission should be an effort to integrate within the community. Once such consistency no longer exists, journals will lose their purpose, and work can simply be published in overarching outlets whose mere goal is to publish as much work as possible, such as our pre-print repositories. I am not sure this would be good for scientific advancement.

Ontology as key for formalizing engineering knowledge

“But logic itself has no vocabulary for describing the things that exist. Ontology fills that gap: it is the study of existence, of all kinds of entities — abstract and concrete — that make up the world. It supplied the predicates of predicate calculus and the labels that fill the boxes and circles of conceptual graphs”

Sowa – Knowledge Representation, Logical, Philosophical and Computational Foundations

I just wanted to share the above quote from what I often refer to as the bible of advanced engineering informatics, and of anybody in any domain who tries to support domain specialists with computational methods.

In my personal thinking this quote is essential, and it might be helpful for clearly understanding the message my colleague Amy Trappey and I tried to convey in our recent publication.

If you are working in the field and you can get your hands on a copy of Sowa … at least for me it was tremendously helpful to explicitly understand what I am working on in my research and teaching.

Story telling during scientific writing

One of the most important ingredients of a great scientific paper is its story line: something that makes a reader pick up your paper and stay captivated while reading it from start to end. I personally believe that a story line makes or breaks a good scientific paper. So it does make sense to reflect a little more on how one can develop such a story line. Of course, in scientific writing the story line needs to focus on developing an argument for a scientific contribution that contrasts with prior work. Still, such a story line is important for successfully publishing a paper that others would enjoy reading.

When one reads interviews with famous fiction writers, one of the questions most often asked is how the writer developed the idea for their latest book. Below are two examples:

I was having a conversation with friends on WhatsApp, and we were joking around about how we grew up, and the conversation turned to, of all things, how we would tend to goats in the future, and one friend was like, you know they eat everything, and the story dropped into my head, fully formed. I literally told them, got to go, just had a story idea, must go write it down! Eight hours later, Tantie and the Farmhand were born. The name, Tantie Merle, will be familiar to anyone from the West Indies, as it is also an homage to a character created by the legendary West Indian oral storyteller, Paul Keens-Douglas.

R.S.A. Garcia in Uncanny Magazine

I had the idea for this story several years ago. I was reading The Rose and the Briar, the book on American ballads that Sean Wilentz and Greil Marcus edited, and I had the idea of writing a song that was a story that was a murder ballad. It’s been on my to-do list as “murder ballad story” since 2014 or 2015. I had played with an old-time song in my story “Wind Will Rove” but I wanted to try something else with this one.

Then I had to look up the lyrics for something one day, and I went to the lyric website Genius, and that particular song was just covered with comments and interpretations and back-and-forth between commenters. The song was a modern version of a traditional ballad, but one comment was talking about how a verse was about a psychedelic trip, and then a whole bunch of other people had given the comment thumbs down because the comment assumed the song was written by the Grateful Dead when it predated them by centuries. I realized what I wanted to do was write a murder ballad and place it in history, and then have a bunch of internet song critics have their way with it, some accurately and some way off-base. That would allow me to present the song but also focus on both the specificities and the vagaries of the lyrics, the different interpretations, and the ways that various versions could change the meaning and purpose of the song. I wrote the main lyrics first, then started figuring out personalities for my characters and how they would each approach the lyrics, then adding and deleting and moving verses and comments as needed.

Sarah Pinsker in Uncanny Magazine

From these quotes it seems that the idea for a story can come from a myriad of different sources, such as discussions with other people, the reading of secondary texts, or even inspiration from other forms of art, such as music. It also seems as if the ‘story just dropped into the author’s head’ after some time. However, it also seems that just sitting down and writing the story without an initial idea for it is hardly possible. The story line needs to be developed as an idea before the writing process itself can start.

In scientific writing, developing a story line is a process that might still need to come after the scientific study is finalized and before the actual writing process can start. During this process it probably makes sense to discuss a lot with friends and colleagues, re-read the seminal texts that triggered the initial study, and maybe also read extant literature (all while listening to music?). Once a story line is clear, the writing process can then focus entirely on communicating the story as strongly as possible to readers!

How engineering designers talk

In a recent study, my colleague Dr. Lucian Ungureanu analyzed the details of how engineering designers communicate with each other. Lucian looked closely at the language designers used in two recorded design meetings that were concerned with the design of a crematorium. To this end, he applied a text mining technique based on n-grams, which identifies sequences of words (of length n) that are frequently used within a specific communication event. For example, during the two design meetings of the crematorium, participants used the 4-gram ‘it would be nice’ 17 times.
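The counting step behind such an analysis can be sketched in a few lines of Python. This is only a minimal illustration, not the pipeline used in the study: the tokenizer, the function names `tokenize` and `count_ngrams`, and the two sample utterances are all assumptions made up for this example.

```python
from collections import Counter
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def count_ngrams(utterances: list[str], n: int) -> Counter:
    """Count every run of n consecutive words, never crossing utterance boundaries."""
    counts: Counter = Counter()
    for utterance in utterances:
        tokens = tokenize(utterance)
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts


# Hypothetical utterances, invented for illustration only.
meeting = [
    "It would be nice to have a bit bigger entrance.",
    "Yes, it would be nice if the hall had additional space.",
]

four_grams = count_ngrams(meeting, 4)
print(four_grams.most_common(1))  # [('it would be nice', 2)]
```

Counting per utterance rather than over one long string avoids n-grams that span two speakers, which would not correspond to anything a participant actually said.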

Looking at the n-grams, Lucian found that many of the most frequently used ones are used by designers to express ambiguity and vagueness. For example, the 6-gram ‘it would be nice to have’ points towards a vague statement, as it also opens up possibilities for alternative choices. Instances of vague 2-grams would be, for example, ‘bit bigger’ or ‘additional space’. Both of these 2-grams only point towards an extension, but not to the precise magnitude of the extension.

One conclusion that can be drawn from the relative frequency of ambiguous and vague n-grams is that designers use such phrases to indicate important ‘design acts’: statements and discussions within meetings that lead to crucial design changes or innovative design ideas. The findings also point to the overall importance of ambiguous and vague statements during the evolution of design ideas and changes. They allow designers to suggest new avenues and angles without necessarily specifying solutions already, and they invite others into the discussion.

Thinking about computational design tools, this research can provide many insights. Most importantly, the results point towards the need for computational design support tools that allow designers to include ambiguous and vague ideas, something that is, by and large, not yet possible with existing tools. At the same time, it is probably possible to develop intelligent support agents that can detect ambiguous and vague statements and then provide dedicated design support during meetings, such as triggering specific visualizations or additional supporting information and data on the fly.
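As a rough idea of what the detection part of such a support agent might look like, the sketch below flags utterances that contain known vague phrases. It is a deliberately naive assumption of mine, not something taken from the paper: the marker list is hypothetical (only ‘it would be nice’, ‘bit bigger’, and ‘additional space’ come from the text above), and a real agent would more likely learn such markers from transcribed meetings.

```python
import re

# Hypothetical marker phrases; only the first three are taken from the text above.
VAGUE_MARKERS = ("it would be nice", "bit bigger", "additional space", "maybe", "sort of")


def find_vague_markers(utterance: str) -> list[str]:
    """Return the marker phrases that occur in the utterance as whole words."""
    normalized = " ".join(re.findall(r"[a-z']+", utterance.lower()))
    padded = f" {normalized} "
    return [marker for marker in VAGUE_MARKERS if f" {marker} " in padded]


def flag_transcript(utterances):
    """Yield (index, utterance, markers) for every utterance containing a marker."""
    for index, utterance in enumerate(utterances):
        markers = find_vague_markers(utterance)
        if markers:
            yield index, utterance, markers


# Hypothetical transcript, invented for illustration only.
transcript = [
    "The chapel seats eighty people.",
    "Maybe the waiting room could be a bit bigger.",
]

flagged = list(flag_transcript(transcript))
for index, utterance, markers in flagged:
    print(index, markers)  # 1 ['bit bigger', 'maybe']
```

A flagged utterance could then serve as the trigger for showing a visualization or pulling up supporting data for the part of the design being discussed.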

If you are interested in this research you can find the full paper here.

Modeling, abstraction, and digital twins

In our recently granted Horizon 2020 project Ashvin, we defined a digital twin as a digital replica of a building or infrastructure system together with possibilities to accurately simulate its multi-physics behavior (think of structural, energy, etc.). Additionally, digital twins provide possibilities to represent all important processes around the building or infrastructure system throughout its product development lifecycle. These processes include evolving design information (as-designed vs. as-built) and an accurate description of all relevant construction as well as maintenance activities. The technical enabler for such digital twin representations is the internet of things (IoT), which allows connecting real-time data coming from sensors and cameras so as to establish a close correspondence between the physical entities and processes and their virtual representation. This definition is similar to those provided by others, for example Sacks et al. (2020) – https://doi.org/10.1017/dce.2020.16.

A closer look at these definitions, however, reveals the inherent danger of blurring technical possibilities with the realities of engineering design work and the constraints that are imposed on engineering by the boundaries of human cognition. The technical possibilities we have nowadays for increasing the sophistication and depth of models representing products and their behavior are growing quickly. At the same time, we are more and more able to fuse and combine different data-driven and physics-based models with each other in ways that would not have been computationally possible just a few years ago. What is missing from technically driven definitions, however, is a clear focus on how an increase in model complexity can support engineers.

Reflecting some more, the notion of a ‘twin’ of the real world that exists in the digital might be ill-chosen. After all, engineers establish models as simplified and highly abstracted representations of reality so that they can cognitively deal with reality’s complexity. Instead of ‘accurately simulating the multi-physics behavior’ and ‘representing all important processes’, engineers are probably better served by models that rely on simplified simulations of the multi-physics behavior and represent very few processes. The aim, of course, is to support engineers within their limited cognitive abilities. This also follows from the utilitarian understanding that a simpler model that allows for similar understanding is superior to a more complex one.

To account for this aspect, it might be appropriate to start technical research and development efforts from a solid and in-depth empirical understanding of engineering work. From this understanding clear requirements can be derived, not only in terms of what needs to be modeled, but, equally important, in terms of how abstract these models need to be to still allow engineers to come to creative conclusions within their cognitive abilities.

To allow for such a development approach, the strategy we will follow on Ashvin is a clear focus on how engineers can impact the productivity, resource efficiency, and safety of construction processes within different phases of the design and engineering life-cycle. From this we derived a number of very specific applications for supporting engineers (we call this the Ashvin tool kit), and we drive all digital twin related development work from the requirements for these applications. The next three years will show how successful we can be with such an approach in achieving our envisioned impact. The project’s website should be online soon at www.ashvin.eu.

Cod and off-shore wind farm engineering

The complexity of engineering systems, and of the factors that need to be accounted for during their design, is thrilling and exciting. Few systems we engineer within the natural and built environment can be realized in isolation; most of them need to be designed accounting for many different influences in their environment.

The design and engineering of off-shore wind farms is a great example of this complexity. On the surface it seems rather simple to design and engineer off-shore wind parks, at least in comparison to urban systems. After all, wind parks are operated in isolation. Weather and water influence the structure, but, though chaotic, these processes are well understood from an engineering perspective. It seems as if little integration with other systems needs to be considered.

Looking closer, however, many intriguing processes come to the fore that influence the design. For example, by now, it is well understood that the maintenance of off-shore wind farms needs to be accounted for and integrated already during the early design activities. One of our previous studies, for example, looks at health and safety conditions of these maintenance activities and the problems of reaching turbines in harsh weather conditions.

The question of how to best design and engineer off-shore wind farms, however, does not stop here. For example, the design and choice of different possibilities for foundations influences how the marine ecosystem can develop and thrive within the parks. An interesting study of the Thünen Institute, for example, showed that cod, a species of fish whose population has collapsed in recent years, can thrive in between the foundations of off-shore wind farms. How the wind farm foundation is designed can therefore influence the development of the marine ecosystem within the park.

Knowing the surroundings before renovating

To design, plan, and execute a building renovation, engineers need to understand not only the building itself, but also quite a lot about the building’s environment. Knowledge about the environment is already required in the early stages of building condition assessment. For example, to plan drone flights around a building it is important to know whether there are trees or other objects around that would inhibit the drone flight. To evaluate different design options later in the process with respect to energy and occupant-related performance, knowledge about the local weather conditions or objects that might provide shading is required. During execution, one of the most important aspects that needs to be accounted for is accessibility.

The above are only a couple of examples of the required knowledge. On our projects, more often than not, this knowledge is not systematically managed using our existing information models. To overcome this problem, in her research within the European BIM-Speed project, Maryam Daneshfar explored the knowledge that engineers require for renovating buildings. She formalized her findings in an ontology that is freely available here and discussed in a preliminary conference paper. I am sure publications about the ontology will soon follow in scientific journals. I will keep you posted.

Automated Text Mining to the Rescue of Knowledge Management

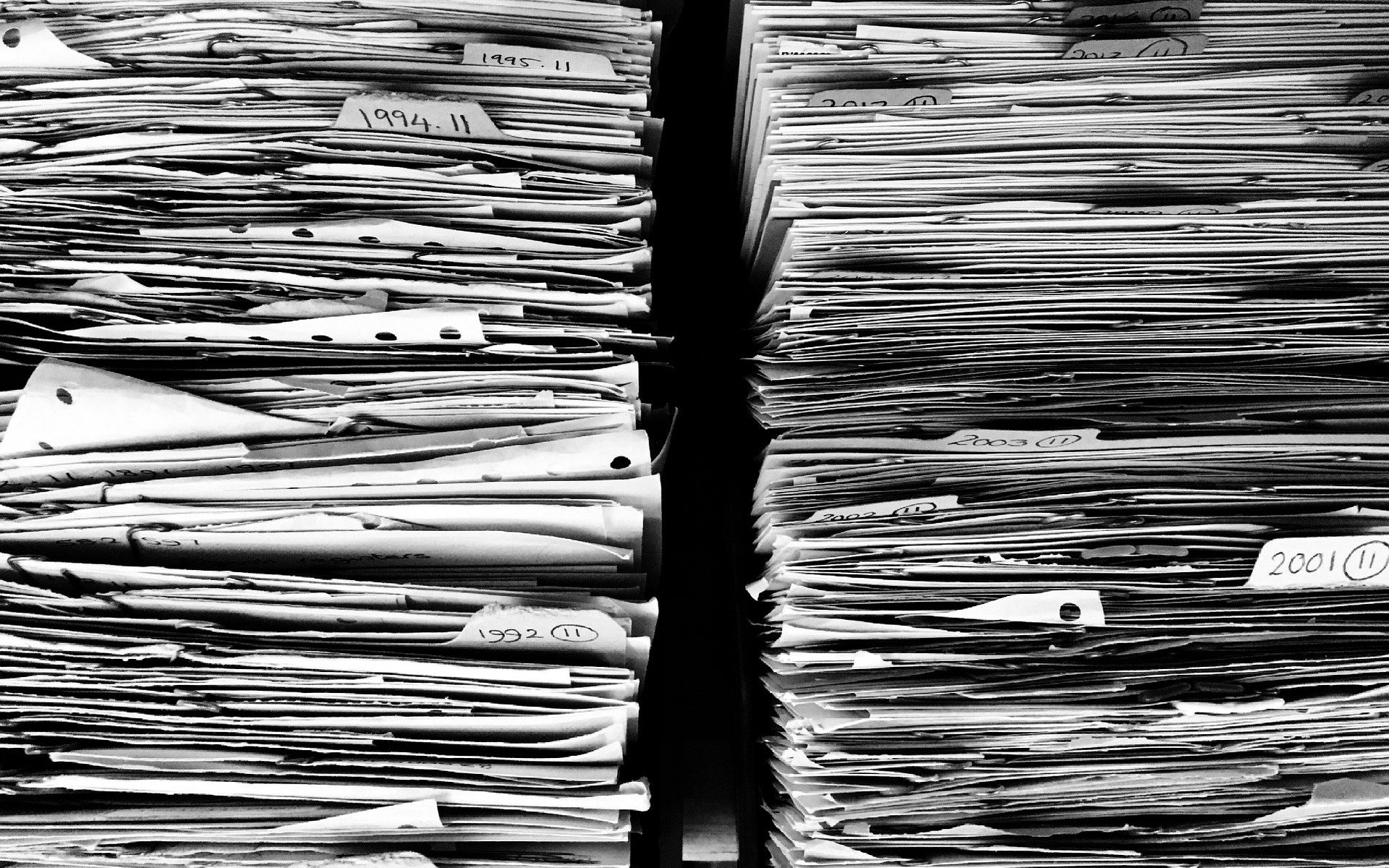

Over the years I have worked with and for a great number of large engineering and design companies operating globally. The secret to successfully managing these companies revolves largely around strategies to accurately manage knowledge. As my colleague and good friend Amy Javernick-Will wrote, this knowledge can be categorized along two main lines: local market knowledge and global technical engineering knowledge (Javernick-Will and Scott 2010). I feel that most large design and engineering organizations have a good handle on managing the local knowledge by creating decentralized organizational structures and by having an extensive merger and acquisition strategy. However, I also feel that most of the companies I worked for still struggle to a certain extent with managing their global technical expertise. Maybe these struggles are most visible in the efforts to establish central knowledge management systems and to convince the majority of the workforce to use these systems to post knowledge and best practice studies, and to establish global discussion networks around central technical topics of importance.

The main problem with these traditional platforms is that they require extra effort from employees to post, to maintain entries, and to discuss. In a business that is still mainly oriented towards billing hours to clients this is a difficult problem, as most employees are mainly concerned with selling their valuable work hours. This of course holds in particular for the experienced and knowledgeable people, who hardly ever find time to support knowledge management tasks. At the same time, however, the large design and engineering organizations sit on a large gold mine of information that could be leveraged for establishing central knowledge management systems: reports, project reviews, internal memos, and all types of other textual data. In recent years many studies have also shown the feasibility of automatically text mining these documents to establish automated knowledge categories, dedicated search engines, and knowledge networks that link important concepts. One of my most notable colleagues in this area is, for example, Nora El-Gohary, who has shown the potential in a myriad of different studies. In some of our recent work we have also shown the potential for renovation projects. Commercially, some early start-ups are also trying to leverage this idea, such as the Berlin-based Architrave. However, I believe that the true potential to leverage the power of automated text mining lies with the large design and engineering companies. The methods and algorithms exist; the key to successful implementations, however, lies in the availability of large textual databases that only exist at these large-scale multi-national corporations.

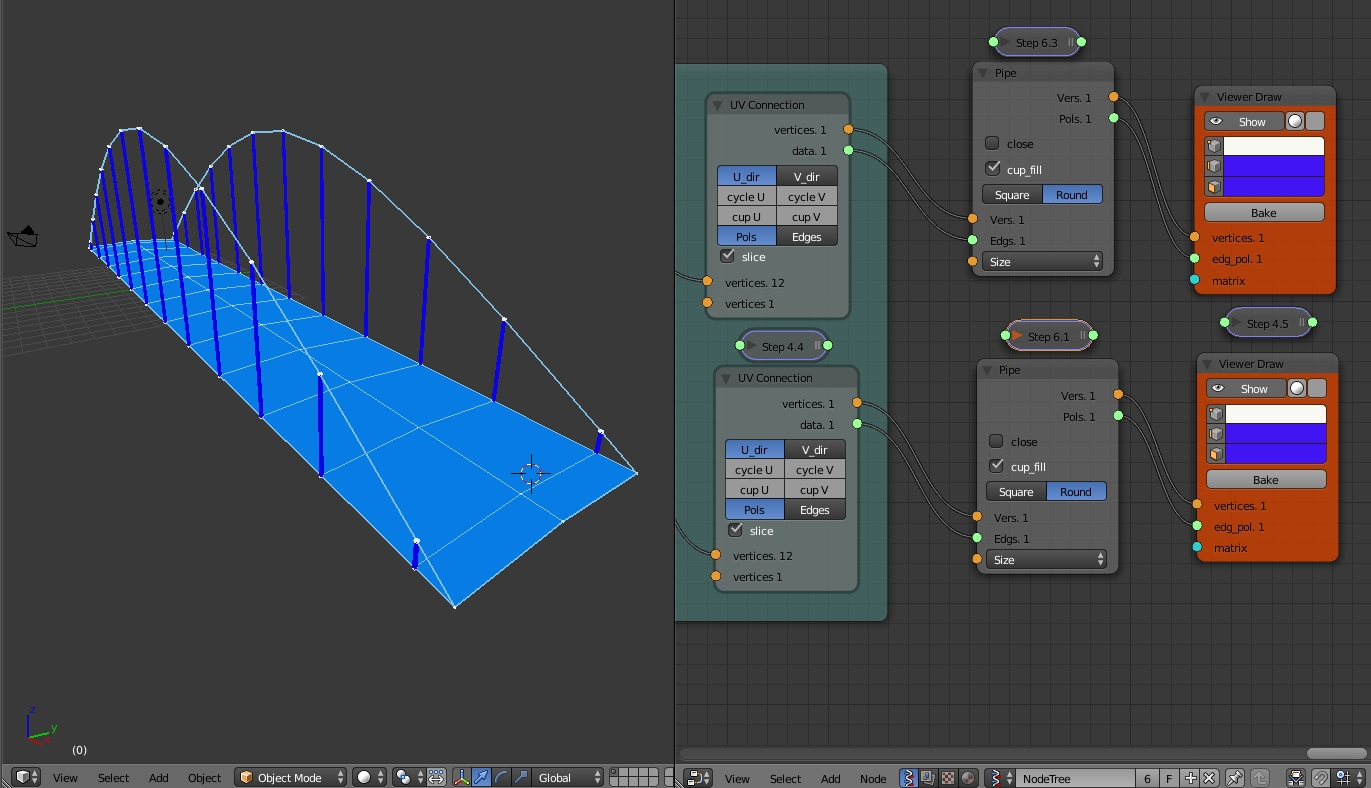

What’s that fuss about geometry?

Looking at the scientific discourse and, partly, at what we teach our students in engineering, one might wonder about the above question a lot. We have very vibrant research and teaching lines in engineering that focus on computational geometry. In practice, too, we often see that the discussion around Building Information Modeling and related standards focuses to a great extent on geometry (the 3D model as the equivalent of the Building Information Model).

For quite some time I have been wondering whether this focus is still relevant in light of the ever-advancing BIM practice. After all, BIM-based design is parametric by nature: designers choose different building components (walls, doors, windows, roofs), choose parameters of the components (height, width, depth), and the computer automatically generates the geometry of the elements. Moreover, the computer generates not only one geometry, but many different geometric representations. This allows the object to be represented in the multiple views (and levels of representational detail) that the software provides.
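To make the parametric idea concrete, here is a minimal sketch, under my own simplifying assumptions, of a component that stores only its design parameters and derives several geometric representations on demand. The `Wall` class, its axis-aligned box geometry, and the method names are hypothetical and do not correspond to any particular BIM tool or exchange format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Wall:
    """A wall described only by its design parameters (in metres)."""
    length: float
    height: float
    thickness: float

    def footprint(self) -> list[tuple[float, float]]:
        """2D plan view: the four corners of the wall's base rectangle."""
        return [(0.0, 0.0), (self.length, 0.0),
                (self.length, self.thickness), (0.0, self.thickness)]

    def solid(self) -> list[tuple[float, float, float]]:
        """3D view: eight box vertices, bottom face first, then top face."""
        base = self.footprint()
        return [(x, y, z) for z in (0.0, self.height) for x, y in base]

    def volume(self) -> float:
        """Derived quantity computed from the parameters, not from the geometry."""
        return self.length * self.height * self.thickness


wall = Wall(length=4.0, height=2.5, thickness=0.2)
taller = Wall(length=4.0, height=3.0, thickness=0.2)

print(len(wall.solid()))                       # 8
print(taller.footprint() == wall.footprint())  # True
```

Changing a single parameter (here the height) regenerates the 3D representation while the plan view stays the same; only the three parameters would need to be exchanged to reproduce either view.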

Considering that modern design practice moves more and more towards parametric design tools, I am wondering whether teaching and science about how to generate complex geometric models should move more and more into the domain of software developers.

Does it really make sense to represent geometry within our interoperable product model exchange formats? Or do we rather need to think harder about ways to exchange the logical design parameters and leave it to the software engineers who implement the standards to think about the best way to represent geometry? Do we need geometric model checkers, or can these be replaced, by and large, by software that checks parameters? Will engineers in the future start their design efforts by drawing geometry, or will they directly jump into creating parametric networks of design logic and let the computer generate the geometry automatically for them?

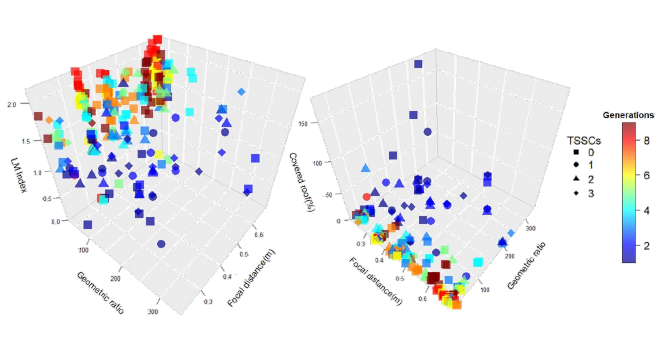

Design optimization: Searching for the Impact

Design optimization tools are becoming readily available and easy to use (see, for example, Karamba or Dynamo). It is not surprising that studies exploring these tools are exploding. Many examples exist that illustrate how to design optimization models and execute optimizations. Often, however, these studies fail to demonstrate the true impact that was expected in terms of improving the (simulated) performance of the engineering design. Showing that the deflection of a structure could be reduced by a centimeter, or that the material used for the structure was reduced by a few percent, remains of course an academic exercise that can provide little evidence of the engineering impact of optimization technologies.

As we move forward in this field of research, we need to develop more studies that move away from simply showing the feasibility of applying relatively mature optimization methods and towards formalizing optimization problems that matter. Finding such problems is not easy, as we cannot truly estimate the outcomes of mathematical optimizations upfront. Whether a specific impact can be achieved can only be determined through experimentation, a long, labor-intensive, and hard process.

Even worse, identifying relevant optimization problems through a discussion with experts is difficult. The outcomes of each design optimization need to be compared with the solution an expert designer would have developed using their intuition and a traditional design process. Hence, working with expert designers to identify problems might be tricky. After all, they are experts and can probably already come up with pretty good solutions. It seems as if one would rather need to identify problems that are less well understood, but still relevant. These problems might also be scarce, as relevant problems are of course much more widely researched.

In the end, I think we need to set ourselves up for a humble and slow approach: an approach that is time-consuming, that will require large-scale cooperation, and that will face many setbacks in terms of providing an impact that truly matters. Maybe this is also the reason why a disruption of design practice is not yet visible. Until we are able to truly understand how we can impact design practice with optimization, we will need to rely on human creativity and expertise for some time to come. (Not saying that we should stop our efforts.)