Looking at the scientific discourse and, in part, at what we teach our engineering students, one might well wonder about the question above. We have very vibrant research and teaching lines in engineering that focus on computational geometry. In practice, too, the discussion around Building Information Modeling and related standards often focuses to a great extent on geometry, with the 3D model treated as the equivalent of the Building Information Model.

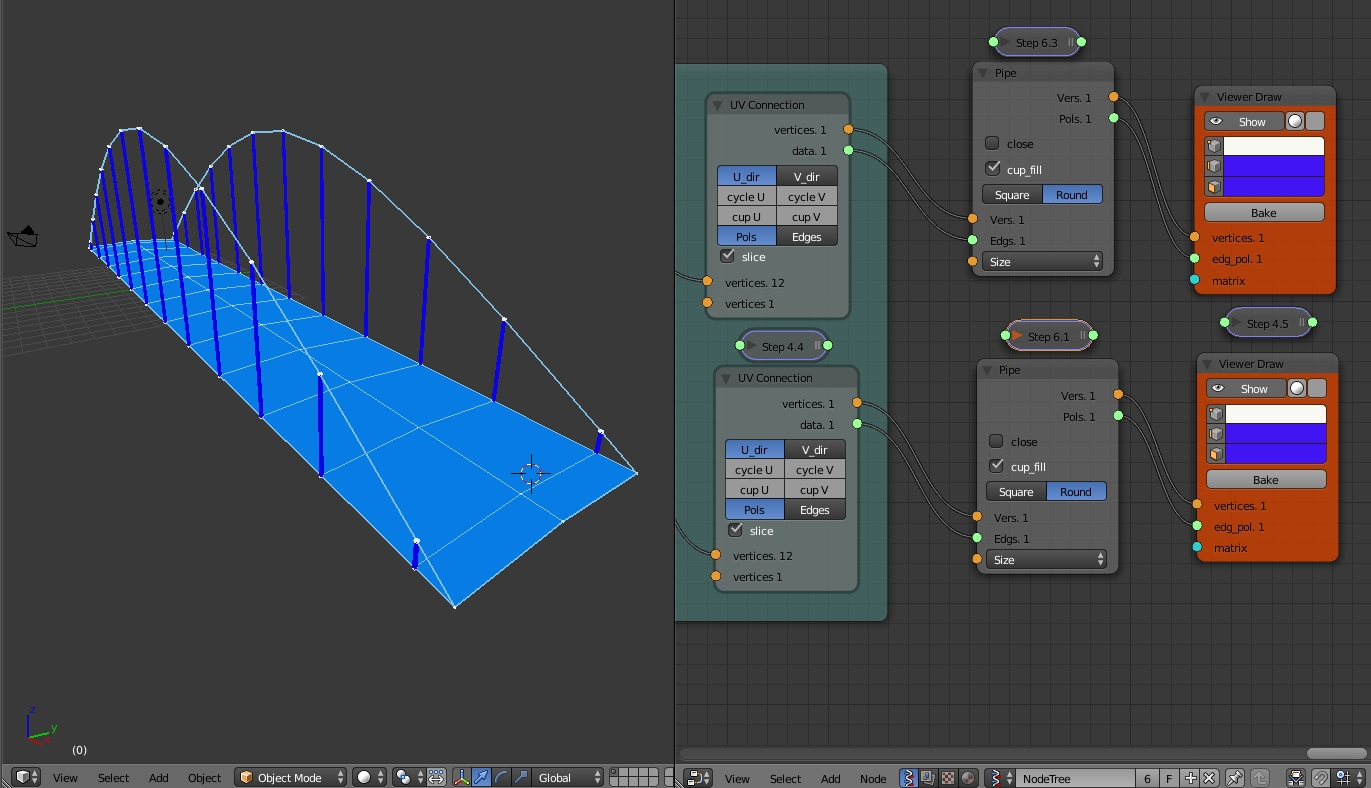

For quite some time I have been wondering whether this focus is still relevant in light of ever-advancing BIM practice. After all, BIM-based design is parametric by nature: designers choose building components (walls, doors, windows, roofs), set the parameters of those components (height, width, depth), and the computer automatically generates the geometry of the elements. Moreover, the computer generates not just one geometry but many different geometric representations. This makes it possible to represent the object in the multiple views (and levels of representational detail) that the software provides.
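To make this concrete, here is a minimal Python sketch of the idea, not how any particular BIM tool works internally. The names `WallParameters`, `plan_outline`, and `box_vertices` are hypothetical and chosen only for illustration. The designer supplies three numbers, and two different geometric representations are derived from them on demand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WallParameters:
    """Hypothetical parametric wall: only logical design values, no geometry."""
    length: float     # metres
    height: float     # metres
    thickness: float  # metres


def plan_outline(wall: WallParameters) -> list[tuple[float, float]]:
    """Derive a 2D rectangle, as a floor-plan view might show the wall."""
    return [
        (0.0, 0.0),
        (wall.length, 0.0),
        (wall.length, wall.thickness),
        (0.0, wall.thickness),
    ]


def box_vertices(wall: WallParameters) -> list[tuple[float, float, float]]:
    """Derive the 8 corner points of a 3D box by extruding the plan outline."""
    return [(x, y, z) for z in (0.0, wall.height) for (x, y) in plan_outline(wall)]


# The designer only sets parameters; both representations follow from them.
wall = WallParameters(length=4.0, height=2.7, thickness=0.2)
outline = plan_outline(wall)
solid = box_vertices(wall)
```

Changing `height` or `length` changes every derived representation at once, which is the sense in which the geometry is a by-product of the parameters rather than the primary design information.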

Considering that modern design practice is moving more and more towards parametric design tools, I wonder whether teaching and research on how to generate complex geometric models should increasingly move into the domain of software developers.

Does it really make sense to represent geometry within our interoperable product model exchange formats? Or do we rather need to think harder about ways to exchange the logical design parameters, and leave it to the software engineers who implement the standards to work out the best geometric representation? Do we need geometric model checkers, or can these, by and large, be replaced by software that checks parameters? Will engineers in the future start their design efforts by drawing geometry, or will they jump directly into creating parametric networks of design logic and let the computer generate the geometry for them?
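As a rough illustration of what checking parameters instead of geometry could look like, consider the following Python sketch. Everything in it is an assumption made for the example: the function `check_door`, the dictionary keys, and in particular the threshold `MIN_DOOR_WIDTH_M`, which is an invented value and not taken from any building code or standard.

```python
# Hypothetical minimum clear width in metres; an illustrative value only,
# not taken from any real regulation.
MIN_DOOR_WIDTH_M = 0.9


def check_door(params: dict[str, float]) -> list[str]:
    """Return a list of rule violations found in a door's parameters."""
    issues = []
    if params["width"] < MIN_DOOR_WIDTH_M:
        issues.append(
            f"width {params['width']} m is below the minimum {MIN_DOOR_WIDTH_M} m"
        )
    if params["height"] <= 0:
        issues.append("height must be positive")
    return issues


narrow_door = check_door({"width": 0.8, "height": 2.1})
valid_door = check_door({"width": 1.0, "height": 2.1})
```

The check never touches a mesh or a solid; it reads two numbers. Whether rules that depend on spatial relations between elements, such as clashes, can be expressed this way is exactly the open question.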